Integrate Spider Bot for Real-Time Data Retrieval on Discord

Spider Bot brings real-time web data retrieval directly to your Discord server. This guide walks you through setting up the bot and using its slash commands to crawl, scrape, and screenshot websites. Let’s dive in!

Add Spider Bot to Discord

There is nothing to download or install, because Discord bots are added to a server by invitation. Click the link below to invite Spider Bot to your Discord server:

Step-by-Step Guide to Using Spider Bot

Step 1: Invite the Bot to Your Server

Click the invite link above, which redirects you to Discord’s OAuth2 authorization page. Select the server where you want to add the bot and follow the prompts to grant the requested permissions. You need the Manage Server permission on that server to add a bot.

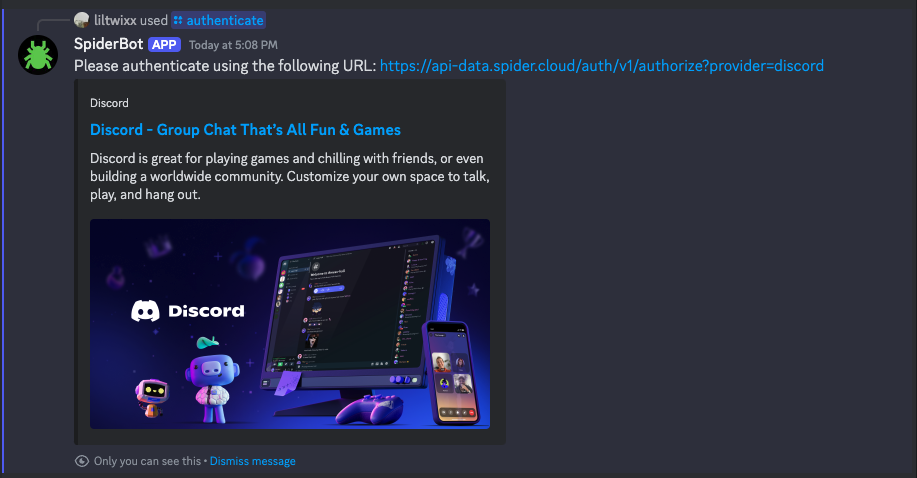

Step 2: Authenticate with Spider

Before using the bot to crawl or scrape content, you need to authenticate. Start with the /authenticate command:

/authenticate

The bot will prompt you to enter your Spider credentials. Follow its instructions to complete authentication.

Step 3: Purchase Credits

To use Spider’s crawling and scraping capabilities, you need credits. You can buy them on the Credits page, or top up at any time from Discord with the following command:

/purchase_credits

Enter the number of credits you want to purchase and follow the bot’s instructions to complete the transaction.

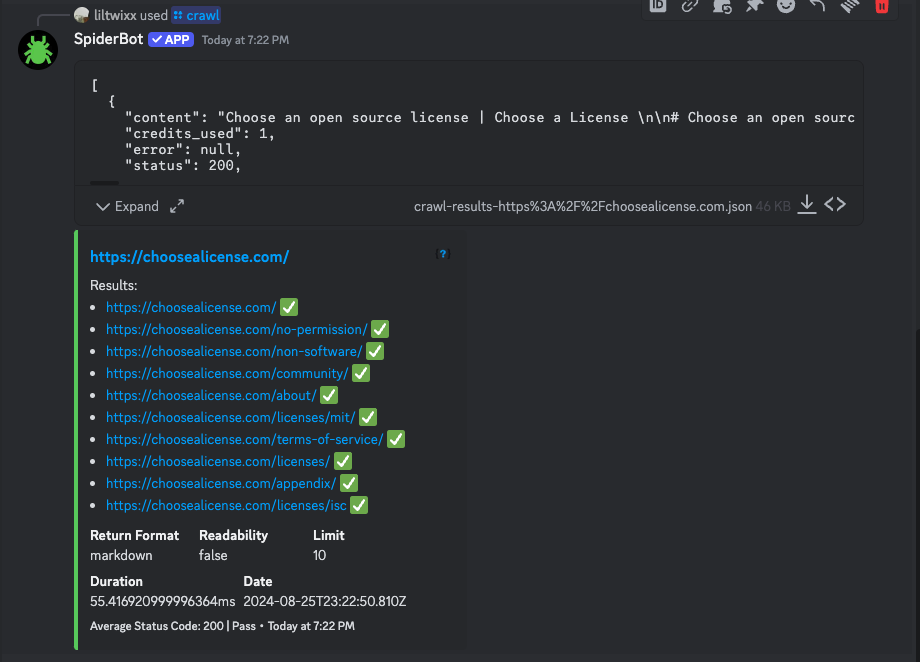

Step 4: Crawl Content

Once you are authenticated and have enough credits, you can start crawling websites. Use the /crawl command and specify the URL and other parameters:

/crawl url:<website_url> limit:<number_of_pages> return_format:<format> readability:<true_or_false>

- URL: Enter the URL of the website to crawl.

- Limit: Specify the number of pages to crawl (up to 40).

- Return Format: Choose the format for the returned content (‘raw’, ‘markdown’, ‘commonmark’, or ‘bytes’).

- Readability: Optionally, enable readability to clean up the content for large language models (LLMs).

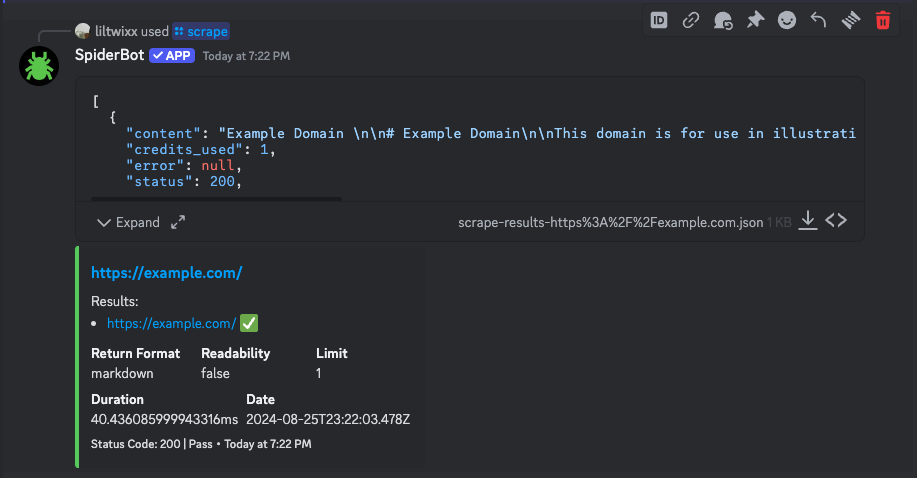
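If you prepare crawl commands ahead of time, for example to share them with teammates, a small helper can catch mistakes before you send them to the bot. The Python sketch below is hypothetical and not part of Spider Bot. The function name `build_crawl_command` is illustrative. It enforces only the constraints stated above: a page limit of at most 40, one of the four listed return formats, and a true/false readability flag. The lower bound of 1 page and the requirement for an http(s) URL are additional assumptions.

```python
# Hypothetical helper (not part of Spider Bot): assemble a /crawl command
# string and check it against the limits described in this guide.
from urllib.parse import urlparse

# Return formats listed in this guide.
RETURN_FORMATS = {"raw", "markdown", "commonmark", "bytes"}
MAX_PAGES = 40  # the guide states /crawl accepts up to 40 pages


def build_crawl_command(url: str, limit: int, return_format: str,
                        readability: bool = False) -> str:
    """Return a /crawl command string, raising ValueError on invalid input."""
    parsed = urlparse(url)
    # Assumption: only absolute http(s) URLs are meaningful crawl targets.
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not an http(s) URL: {url!r}")
    # Assumption: at least 1 page; the upper bound comes from the guide.
    if not 1 <= limit <= MAX_PAGES:
        raise ValueError(f"limit must be between 1 and {MAX_PAGES}, got {limit}")
    if return_format not in RETURN_FORMATS:
        raise ValueError(f"unknown return_format: {return_format!r}")
    # Render the boolean as lowercase true/false, matching the guide's placeholder.
    return (f"/crawl url:{url} limit:{limit} "
            f"return_format:{return_format} "
            f"readability:{str(readability).lower()}")


if __name__ == "__main__":
    # prints /crawl url:https://example.com limit:10 return_format:markdown readability:true
    print(build_crawl_command("https://example.com", 10, "markdown", True))
```

In the Discord client you normally fill in each option in its own field as you type the slash command, so treat the generated string as a checked reference for what to enter rather than something the bot is guaranteed to parse when pasted.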

Step 5: Scrape Content

If you need to scrape specific content from a website, use the /scrape command. This command is particularly useful for extracting data from single pages.

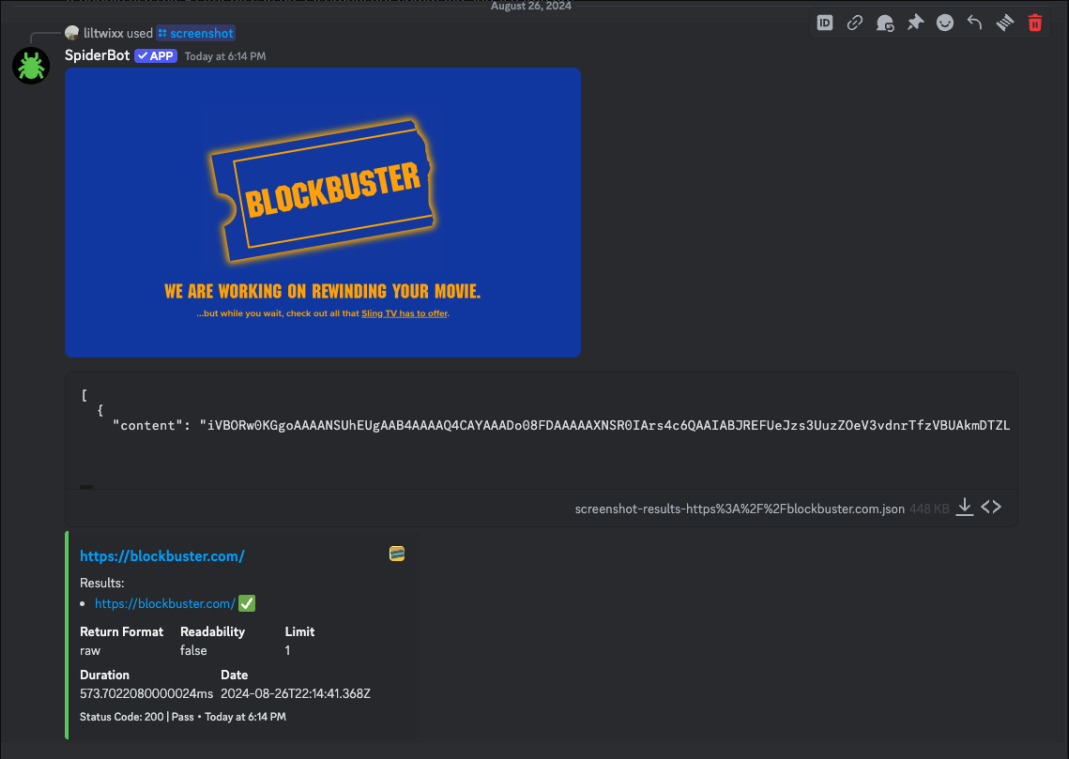

Step 6: Screenshot Website

If you need to take screenshots of specific content from a website, use the /screenshot command. This command is particularly useful for taking multi-page screenshots.

/scrape url:<website_url> return_format:<format> readability:<true_or_false>- URL: Enter the URL of the website to scrape.

- Return Format: Choose the format to return the scraped content (‘raw’, ‘markdown’, ‘commonmark’, or ‘bytes’).

- Readability: Optionally, enable readability to clean up content for LLMs.

Enhance Your Data Interaction with AI Bot

Once Spider is set up, you can use AI Bot or other AI tools to get more out of the data you retrieve.

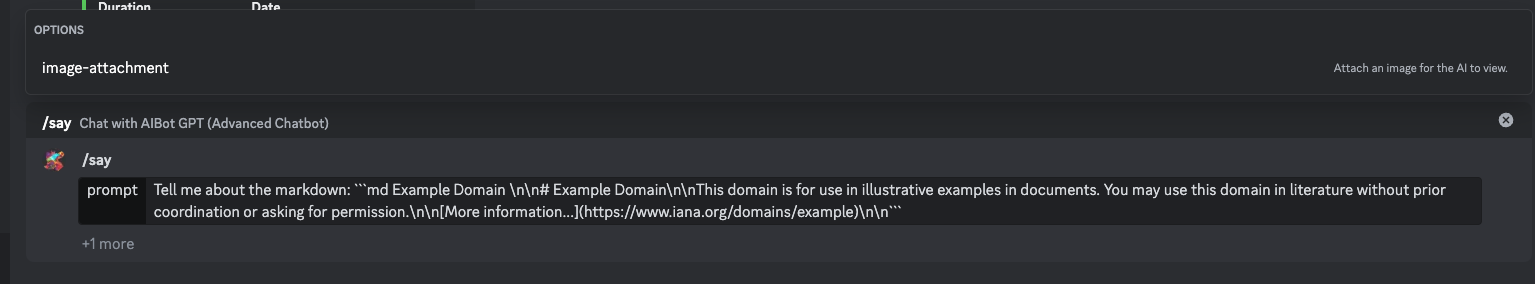

Query Data with AI

Use AI Bot to query the data extracted from websites. You can ask about main topics, recent trends, and more.

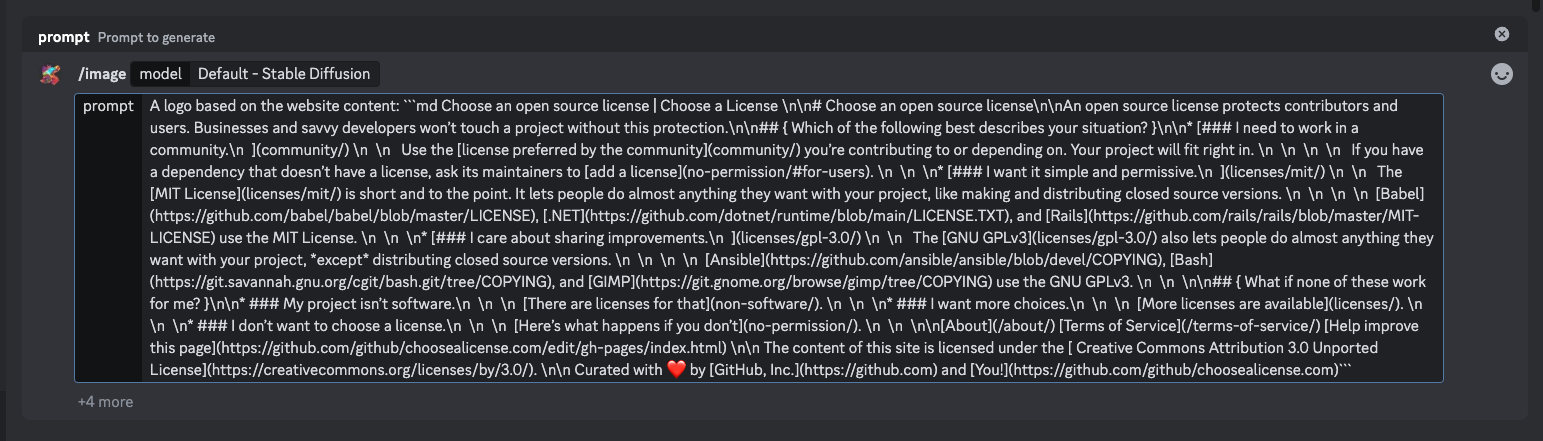

Generate Images from Data

AI tools can turn textual data into images, making it easier to share information with a broader audience. Because Spider can return content in LLM-friendly formats such as Markdown, you can pass it directly to these tools and discuss the results on Discord.

Text to Video

AI tools can also turn text into video from a simple prompt combined with data from a website, making your data even more engaging.

Conclusion

With Spider Bot on your Discord server, you can retrieve and process real-time web data without leaving Discord. Its predefined slash commands let you crawl, scrape, and screenshot web content, and AI tools can then help you analyze what you collect.

For more information or assistance, refer to the official documentation or contact the support team. Happy crawling and scraping!